About this Project

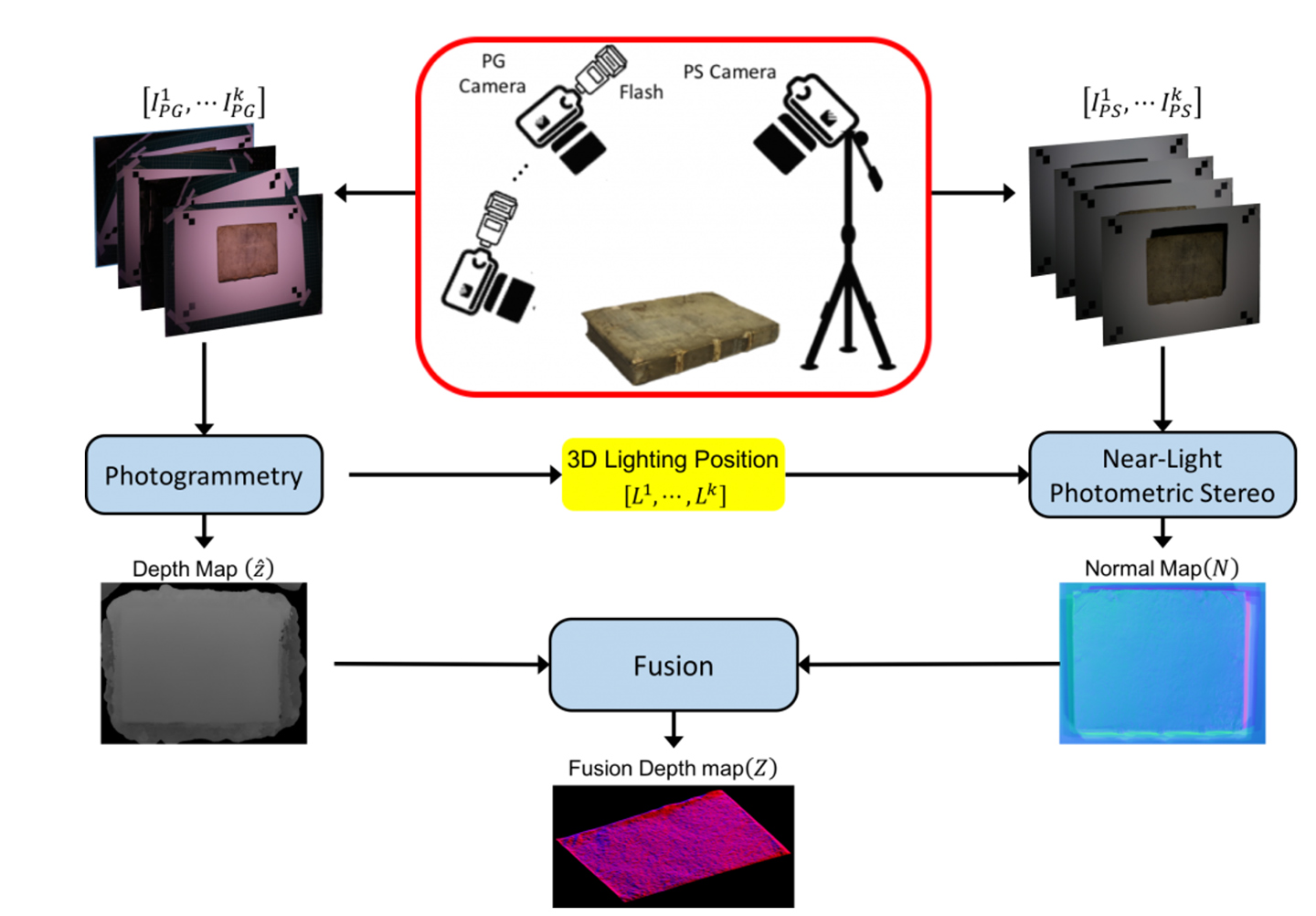

We have developed a technique that attaches a witness camera (the photogrammetry, or PG, camera) to a flash light source and uses photogrammetry to recover the 3D light positions, making photometric stereo (PS) 3D surface reconstruction more robust. The estimated 3D light positions are more accurate than those from other near-light position estimation methods, and consequently produce more accurate surface normals than conventional far-light photometric stereo. We also demonstrate that the PG surface information can be fused with the PS normal map to reconstruct a surface with globally accurate 3D shape and high-quality micro surface details.

The Method

We propose a streamlined framework for robust 3D acquisition of cultural heritage objects using both photometric stereo and photogrammetric information. An uncalibrated photometric stereo setup is augmented by a synchronized secondary witness camera co-located with a point light source. By recovering the witness camera’s position for each exposure with photogrammetry techniques, we estimate the precise 3D location of the light source relative to the photometric stereo camera. We have shown a significant improvement in both light source position estimation and normal map recovery compared to previous uncalibrated photometric stereo techniques. In addition, the proposed configuration improves surface shape recovery by jointly incorporating corrected photometric stereo surface normals and a sparse 3D point cloud from photogrammetry. We use two Canon 5D Mark III cameras with
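
The light-position step described above can be sketched as a rigid transform. This is a minimal illustration, not the project's implementation: it assumes photogrammetry returns the witness camera pose as a rotation `R` and translation `t` that map witness-camera coordinates into the photometric stereo camera frame, and that the light's offset from the witness camera (`offset`) has been measured once beforehand. The function name and all numeric values are hypothetical.

```python
def light_position(R, t, offset):
    """Map the light's offset from the witness-camera frame into the PS-camera frame.

    R is a 3x3 rotation (list of rows) and t a translation such that
    x_ps = R @ x_witness + t. This pose convention is an assumption.
    """
    return [sum(R[i][j] * offset[j] for j in range(3)) + t[i] for i in range(3)]


# Hypothetical pose for one exposure: witness camera rotated 90 degrees about Z.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.5, 0.2, 1.0]

# Assumed fixed offset of the flash from the witness camera centre (arbitrary units).
offset = [0.1, 0.0, 0.0]

pos = light_position(R, t, offset)
print(pos)
```

Repeating this for every exposure gives one 3D light position per image, all expressed in the frame of the photometric stereo camera.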

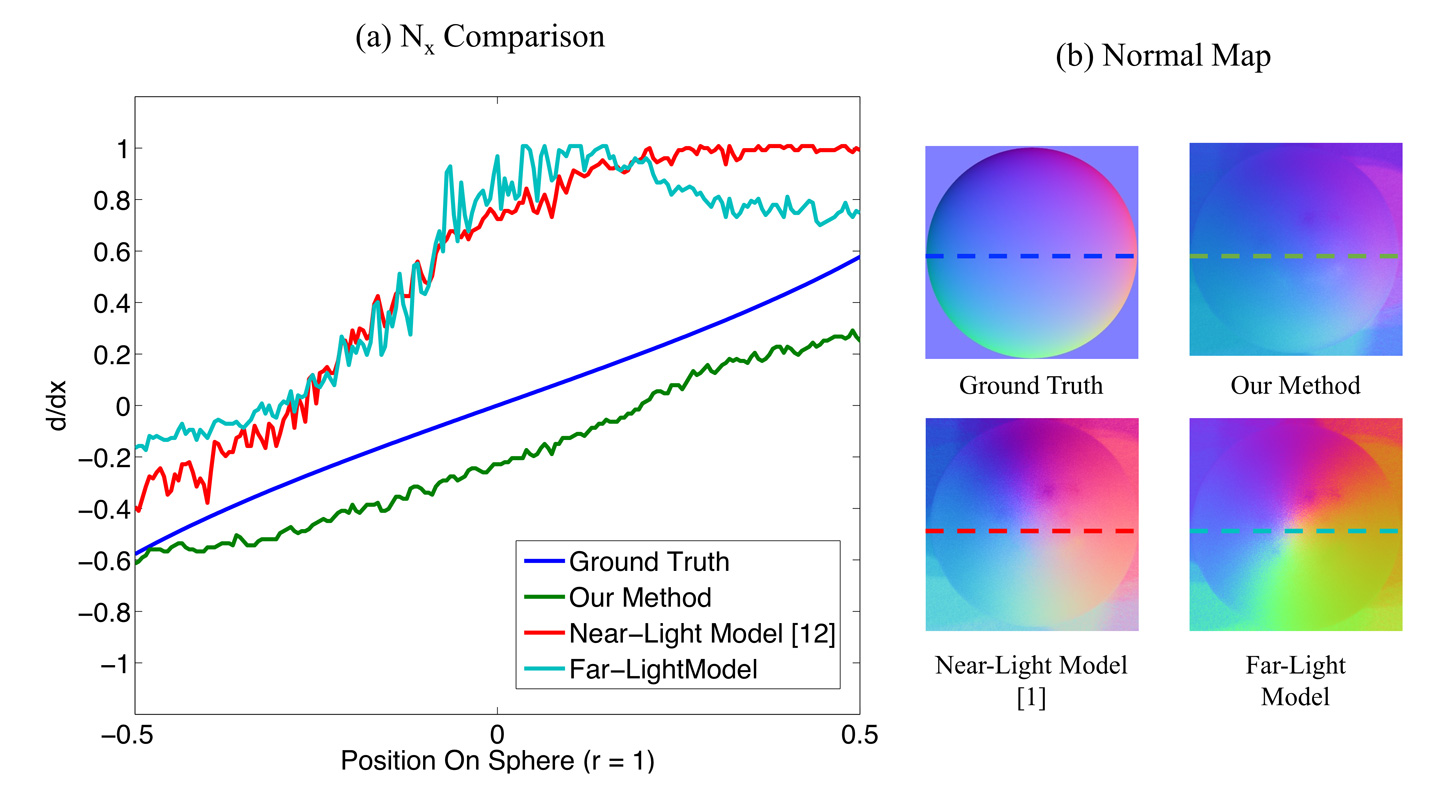

Normal Map Accuracy for a Sphere

Comparison of the X component of the estimated normal maps for a sphere. The ground-truth normal for the sphere (shown in blue) closely resembles a line (the gradient of a parabola is exactly a line). The normal estimates computed under the far-light assumption (shown in cyan) and with the uncalibrated photometric stereo method of Huang et al. (shown in red) both contain significant errors. Our method (shown in green) accurately estimates the 3D light position and therefore produces the most accurate normals of the three.
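
To illustrate why the far-light assumption introduces error, the sketch below renders one Lambertian surface point under three nearby point lights and recovers its normal twice: once treating each light as a distant directional source, and once using the known 3D light positions. It is a synthetic toy example under stated assumptions (inverse-square falloff, a known surface point position, exactly three lights, no noise or shadows), not the pipeline used for the figure. All names and values are hypothetical.

```python
import math


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(A)
    return [det3([[b[r] if j == c else A[r][j] for j in range(3)]
                  for r in range(3)]) / d for c in range(3)]


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def near_light_vector(s, p):
    """Unit direction from point p to light s, scaled by 1/r^2 falloff."""
    d = [s[i] - p[i] for i in range(3)]
    r = math.sqrt(sum(x * x for x in d))
    return [x / r ** 3 for x in d]


def angle_deg(a, b):
    c = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.degrees(math.acos(c))


# Synthetic scene (all values assumed for illustration).
p = [0.3, 0.0, 0.0]                      # surface point, off the object centre
n_true = normalize([0.3, 0.0, 1.0])      # ground-truth normal at p
albedo = 0.8
lights = [[1.0, 0.0, 1.5], [-0.5, 0.9, 1.5], [-0.5, -0.9, 1.5]]

# Render intensities with the near-light Lambertian model.
L_near = [near_light_vector(s, p) for s in lights]
I = [albedo * sum(n * l for n, l in zip(n_true, row)) for row in L_near]

# Far-light assumption: one direction per light, no falloff.
L_far = [normalize(s) for s in lights]
n_far = normalize(solve3(L_far, I))

# Near-light model with known 3D light positions.
n_near = normalize(solve3(L_near, I))

err_far = angle_deg(n_far, n_true)
err_near = angle_deg(n_near, n_true)
print("far-light error (deg):", err_far)
print("near-light error (deg):", err_near)
```

In this noise-free setting the near-light solve reproduces the true normal up to floating-point error, while the far-light solve is biased because both the light directions and the intensity falloff are mismodelled.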

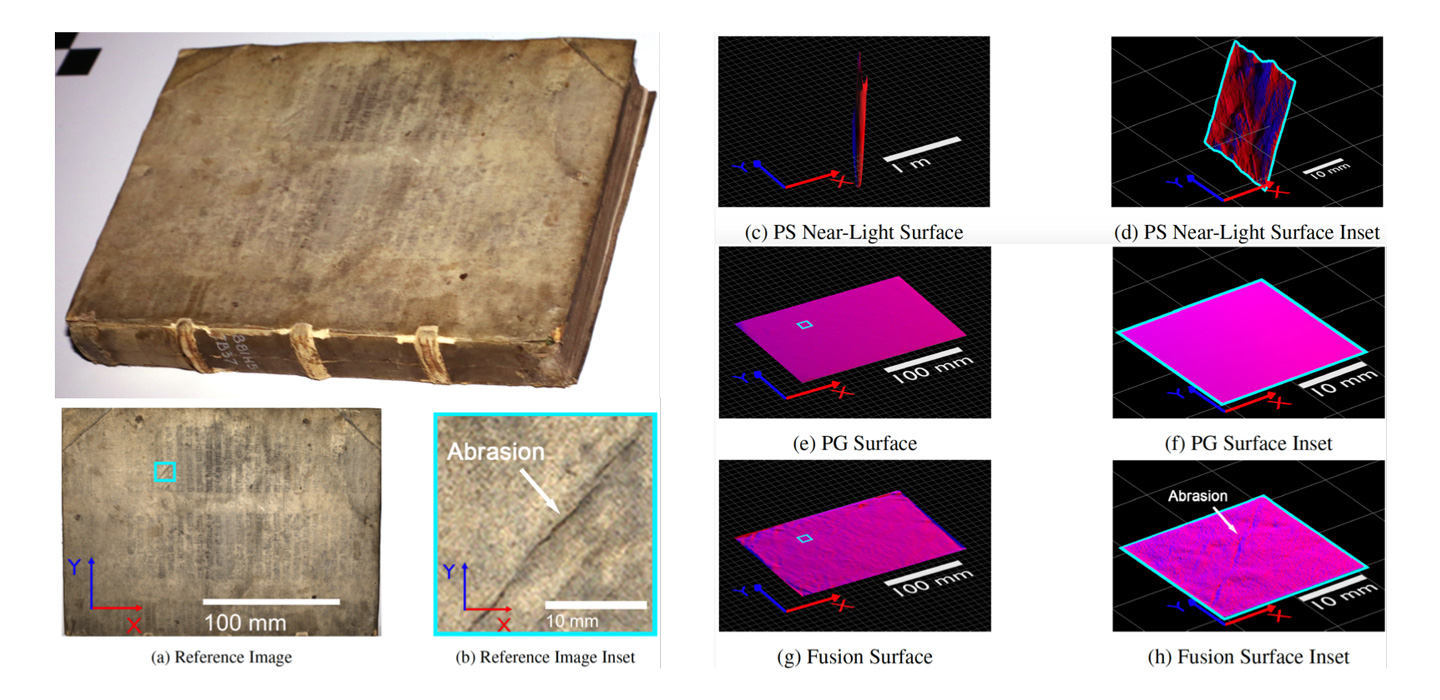

Fusion Surface Reconstruction: 16th Century Book

We tested the visual fidelity of the fused surface reconstructions on a cultural heritage object from our university's rare book collection, an object representative of the intended use case for this technique. The top surface of the book is shown in (a), with a high-resolution inset in (b) corresponding to the outlined region to the left. The recovered surfaces are rendered in orthographic projection and illuminated by a red directional light along the X-axis and a blue directional light along the Y-axis to reveal surface details without exaggerating the scale of the Z-axis. The PS reconstructions using the method of Huang et al., shown in (c) and (d), exhibit severe global geometry errors due to the lack of absolute reference points (the scale in these images was reduced to accommodate the extreme range of Z-axis values). PG output from Agisoft Photoscan is shown in (e) and (f). Our fusion results, produced by optimizing the surface for consistency with both the PS and PG results, are shown in (g) and (h). Note that the fusion results balance coarse geometric accuracy (a flat book surface) with the retention of small surface variations.
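
The idea of optimizing a surface for consistency with both PS and PG data can be sketched in one dimension. The example below is a simplified stand-in, not the optimization used for the book: it assumes PS supplies height differences between neighbouring samples (here with a constant bias that causes global drift), PG supplies a few sparse absolute heights, and the fused profile minimizes a weighted sum of squared residuals to both. The function names, the `weight` parameter, and the synthetic data are all hypothetical.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[0] and upper[-1] are ignored."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def fuse_1d(grad, anchors, weight):
    """Minimize sum (z[i+1]-z[i]-grad[i])^2 + weight * sum (z[k]-anchors[k])^2.

    grad: PS-derived height differences; anchors: {index: PG height}.
    """
    n = len(grad) + 1
    diag = [0.0] * n
    rhs = [0.0] * n
    off = [-1.0] * n  # sub- and super-diagonal of the normal equations
    for i, g in enumerate(grad):
        diag[i] += 1.0
        diag[i + 1] += 1.0
        rhs[i] -= g
        rhs[i + 1] += g
    for k, height in anchors.items():
        diag[k] += weight
        rhs[k] += weight * height
    return solve_tridiagonal(off, diag, off, rhs)


# Synthetic profile: a flat surface with fine ripples (assumed data).
n = 50
z_true = [0.02 * math.sin(i) for i in range(n)]

# PS-like gradients with a small constant bias that accumulates as drift.
grad = [z_true[i + 1] - z_true[i] + 0.01 for i in range(n - 1)]

# PS only: integrate the gradients directly.
z_ps = [0.0]
for g in grad:
    z_ps.append(z_ps[-1] + g)

# PG-like sparse anchors with absolute heights.
anchors = {k: z_true[k] for k in (0, 10, 20, 30, 40, 49)}
z_fused = fuse_1d(grad, anchors, weight=10.0)

err_ps = max(abs(a - b) for a, b in zip(z_ps, z_true))
err_fused = max(abs(a - b) for a, b in zip(z_fused, z_true))
print("PS-only max error:", err_ps)
print("fused max error:", err_fused)
```

The sparse anchors remove the accumulated drift while the gradients still determine the fine ripples between anchors, which mirrors the qualitative behaviour described for panels (c) through (h).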

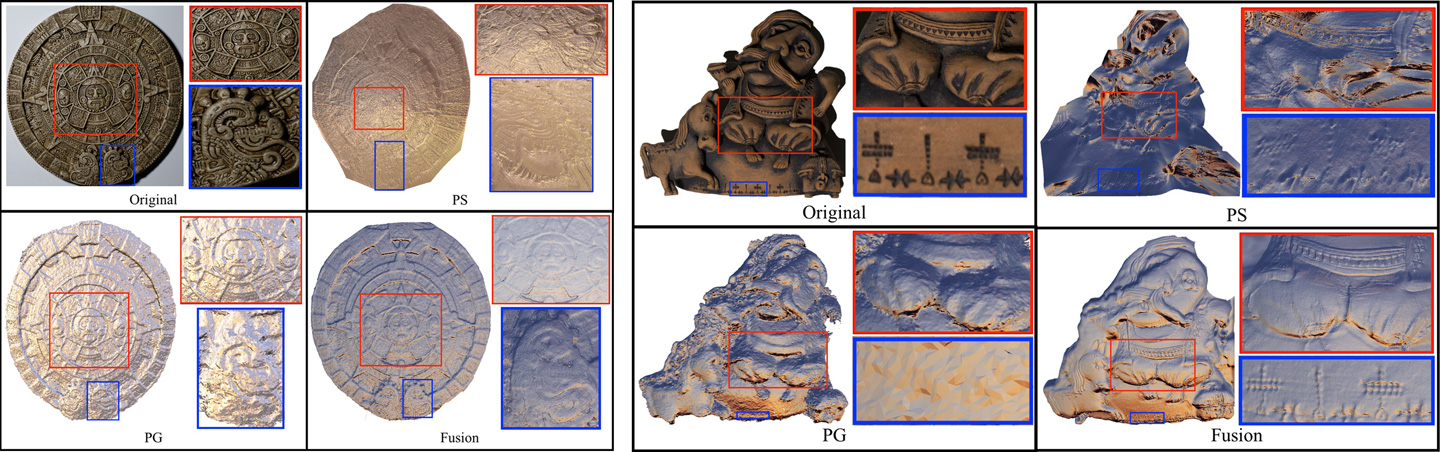

Fusion Surface Reconstruction

We tested our framework on several objects with complex geometry and fine surface detail, including Chinese jade bi carvings (left) and a Hutsul ceramic terracotta sculpture from Ukraine (right). These objects show that our system balances global geometric accuracy with micro surface detail. 3D reconstruction results using only photometric stereo (PS) and only photogrammetry (PG) are shown for comparison. In these comparisons, the fusion results show better 3D reconstruction quality than either PS or PG alone.